AI-Ready Graduates: Innopharma Education’s Approach to Generative AI in Education

Author: Colm O’Connor, College Librarian & Research Specialist, Innopharma Education

Generative AI is now a core part of how knowledge work happens, and higher education has to decide whether to ignore it, ban it, or teach it well. Innopharma Education’s Generative AI Policy and AI Assessment Scale (AIAS) deliberately choose the last option: teach AI well while keeping academic integrity non-negotiable.

What is Generative AI in Education?

Generative AI in higher education refers to artificial intelligence systems that can produce text, data analysis, images, or code in response to prompts. Within an academic context, this includes tools that support drafting, summarising research, generating ideas, and analysing information. For learners heading into data-rich, highly regulated sectors like pharmaceuticals and biopharma, this is not a passing trend but a fundamental shift in how work is done. The landscape of higher education is therefore evolving alongside industry expectations. The policy recognises that where AI is already used in industry, graduates must be prepared to engage with the relevant tools and practices appropriately.

Innopharma Education therefore positions AI not as a shortcut, but as a literacy graduates will need alongside scientific, regulatory, critical thinking and digital skills. Students are expected to learn how to prompt, critique, verify, and correctly attribute AI-assisted work rather than pretending these tools do not exist.

Pros: Opportunities of Generative AI

Used well, generative AI offers clear educational benefits:

- It keeps teaching and assessment current and relevant, reflecting the tools graduates will actually meet in pharma and related industries.

- It can scaffold learning by helping with brainstorming, structuring assignments, summarising background sources and providing language support, which is especially helpful for learners who struggle with academic writing.

- In more advanced tasks, AI output becomes something to critique, validate, and refine, strengthening critical thinking and professional judgement rather than replacing it. Critical thinking is actively taught at Innopharma Education, embedded across our modules and supported by guides that learners can use independently to develop this essential skill.

Framed this way, AI becomes a tool to think with, not a machine that thinks for the learner.

Cons: Risks and the Need for a Usage Policy

The same capabilities that make AI powerful also create real risks if left unmanaged:

- Academic misconduct: AI can generate entire assignments, weakening the link between assessment and genuine competence.

- Hallucinations and inaccuracy: tools can produce confident but false information, which is particularly dangerous in scientific and clinical contexts.

- Copyright and plagiarism: AI may reproduce protected text or images, creating plagiarism and intellectual property (IP) risks if its output is copied uncritically.

- Ethics: opaque data sources, bias, privacy concerns and environmental impact raise ethical questions that learners must be taught to recognise and evaluate.

Innopharma Education’s policy keeps existing Academic Misconduct provisions in force for AI-related misuse and emphasises that accountability always rests with the learner, not the tool. It also explicitly asks staff to remind students to verify output, minimise overreliance on AI-generated content, consider environmental costs and evaluate ethical implications before acting.

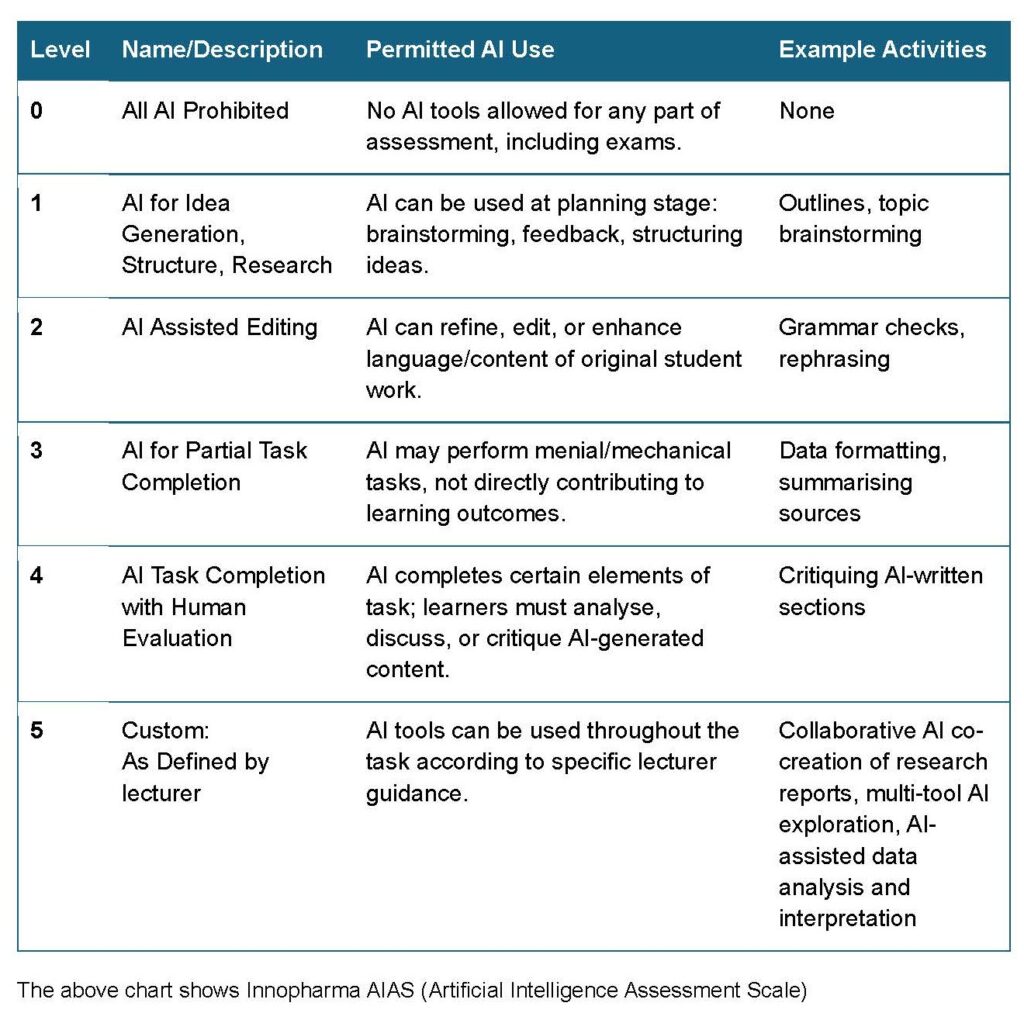

The AI Assessment Scale: Structured, Transparent AI Use

To balance innovation with integrity, Innopharma Education has adopted and adapted the Artificial Intelligence Assessment Scale (AIAS). The scale offers six clearly defined levels, from 0 to 5, and the levels are not cumulative: lecturers choose exactly what is allowed for each task rather than moving up a ladder by default.

Using generative AI effectively in higher education requires more than technical access. It requires research skills development, critical evaluation of outputs, and transparent disclosure where required. Lecturers must explicitly state which levels are permitted in each assignment; anything not explicitly allowed is treated as prohibited. This gives students clarity on acceptable use while still allowing for creativity where it is pedagogically justified.

The Power of the Custom Level

At Level 5, the custom level of the scale, Innopharma Education’s approach becomes genuinely flexible and future-focused. Generative AI can be embedded throughout an assessment as a creative partner, a data-analysis assistant or a simulation tool, provided its use is clearly defined and ethically framed. Typical activities include co-creating reports, exploring multiple AI tools and styles, using AI for data analysis or interpretation, and generating visuals for posters or diagrams of biopharmaceutical processes. This is not an “anything goes” level: it is used when AI is integral to the learning outcomes or when excluding AI is not essential to what is being assessed.

Lecturers retain the autonomy to decide when AI should be central or limited, which tools are permitted, and what documentation is required, so AI use can look very different from one module to the next. For students, this means practising authentic, industry-relevant AI use; for staff, it offers a clear framework that safeguards academic integrity without constraining good pedagogy.

Ultimately, here at Innopharma Education we are not simply tolerating generative AI but intentionally harnessing it to prepare students for the realities of modern work. Our goal is clear: graduates who are confident, AI-literate professionals, and qualifications whose value is protected by robust, integrity-first assessment.